This week's network drive work was good prep for the future of Tritium.

Those exaggerated load times were surprising on a network drive, but they're to be expected when going across the wire to a cloud service.

Enter iManage cloud.

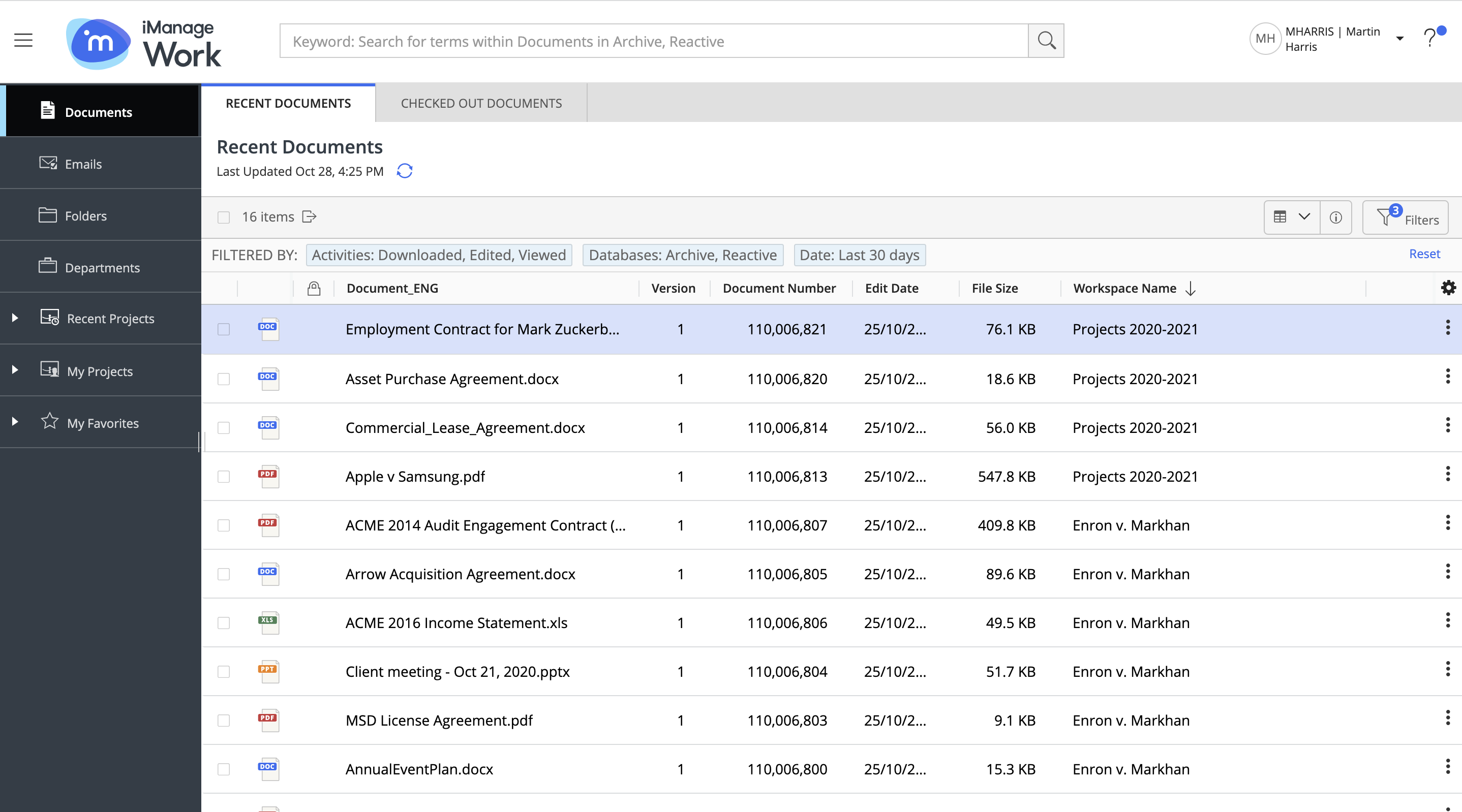

In big legal tech, interacting with the document management system is table stakes. That game is mostly dominated in BigLaw by a company called iManage. Fortunately for Tritium, iManage has approved our partnership, and Tritium will be integrating with iManage over the next few weeks.

Though not always, iManage is often a cloud service today.

So that brings us back to working with high network latency. Since I don't have the iManage documents yet, I'll need to simulate that environment. Luckily, our network drive considerations from earlier make a nice laggy network proxy to get us started on parallelism.

A test harness looks like the following. We set up a Connection, which starts a Server in a separate thread and maintains a two-way communication channel with it. Then we list the files in a laggy network directory, start a clock, and request that the Server initialize each of those files. Then we check our time.
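The real Connection and Server live in Tritium's server module and aren't reproduced here. As a rough sketch of the shape only, assuming `std::sync::mpsc` channels and hypothetical `Request` and `Response` enums (the `Option<Vec<u8>>` payload type is a guess), it might look something like this:

```rust
use std::path::PathBuf;
use std::sync::mpsc::{channel, Receiver, Sender};
use std::thread;

// Hypothetical stand-ins for the real request and response types.
pub enum Request {
    // (path, optional in-memory bytes, show in the UI once loaded?)
    Initialize((PathBuf, Option<Vec<u8>>, bool)),
}

pub enum Response {
    Initialized(PathBuf),
}

pub struct Connection {
    pub sender: Sender<Request>,
    pub receiver: Receiver<Response>,
    // Held here until start_server() hands them to the server thread.
    server_side: Option<(Receiver<Request>, Sender<Response>)>,
}

impl Connection {
    pub fn new() -> Self {
        let (sender, server_rx) = channel();
        let (server_tx, receiver) = channel();
        Connection {
            sender,
            receiver,
            server_side: Some((server_rx, server_tx)),
        }
    }

    pub fn start_server(&mut self) {
        let (requests, responses) = self
            .server_side
            .take()
            .expect("server already started");
        thread::spawn(move || {
            // Handle requests one at a time until the client hangs up.
            for request in requests {
                match request {
                    Request::Initialize((path, bytes, _show)) => {
                        // With no bytes supplied, read from the path.
                        // On a laggy drive, this is the slow part.
                        let _data = bytes.or_else(|| std::fs::read(&path).ok());
                        if responses.send(Response::Initialized(path)).is_err() {
                            break; // the client dropped its receiver
                        }
                    }
                }
            }
        });
    }
}
```

Note that a server loop like this one answers requests strictly in order, so every slow read delays all the requests queued behind it.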

We'll just test with 13 small to medium-sized docx files for the moment.

```rust
#[test]
fn rtt_network_slurp() {
    let mut conn = crate::server::Connection::new();
    conn.start_server();

    let dir = std::fs::read_dir("Z:\\").unwrap();
    let mut responses = 0;
    let start = std::time::Instant::now();

    // Ask the server to initialize every file in the laggy directory.
    for entry in dir {
        responses += 1;
        conn.sender
            .send(crate::server::Request::Initialize((
                entry.unwrap().path(),
                None,  // if this is None, the server reads from the path.
                false, // do we want to show the file in the UI once loaded?
            )))
            .unwrap();
    }

    // Wait until every request has been answered.
    loop {
        if let Ok(..) = conn.receiver.try_recv() {
            responses -= 1;
        }
        if responses == 0 {
            break;
        }
    }

    println!("done in: {}ms", start.elapsed().as_millis());
}

// done in: 31936ms
```

The naive version of this is, again, surprisingly slow. The 50ms jitter causes the Server to spend about 32 seconds initializing the files. That's because each file is read individually from the network drive, and each read is subject to something like 1700ms of network protocol overhead, as discovered previously.

Now, what if we put those read calls on a tokio::runtime::Runtime and run them in parallel, multi-threaded?

If we shift the work into a single separate thread with tokio, we get the following result:

```rust
// done in: 4397ms
```

About a 7x speedup. We'll take it.

We can ship that and also leverage that parallelism in the iManage integration.
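The tokio change itself isn't shown here. To illustrate why overlapping the reads helps, here is a dependency-free sketch that uses scoped `std` threads rather than tokio. Everything in it is hypothetical: `slow_read` just sleeps to stand in for the per-file network overhead, and the file names are made up.

```rust
use std::thread;
use std::time::{Duration, Instant};

// Hypothetical stand-in for reading one file over a laggy link:
// sleeps for `overhead`, then returns some fake contents.
fn slow_read(name: &str, overhead: Duration) -> Vec<u8> {
    thread::sleep(overhead);
    name.as_bytes().to_vec()
}

// One read after another: total time is roughly files * overhead.
fn read_sequential(names: &[&str], overhead: Duration) -> Vec<Vec<u8>> {
    names.iter().map(|n| slow_read(n, overhead)).collect()
}

// One scoped thread per file: the waits overlap, so total time is
// roughly a single overhead rather than the sum.
fn read_parallel(names: &[&str], overhead: Duration) -> Vec<Vec<u8>> {
    thread::scope(|s| {
        // Spawn everything first so all reads are in flight together.
        let handles: Vec<_> = names
            .iter()
            .map(|n| s.spawn(move || slow_read(n, overhead)))
            .collect();
        // Join in spawn order so results line up with `names`.
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    })
}

// Runs `f` and returns its result with the wall-clock time it took.
fn timed<T>(f: impl FnOnce() -> T) -> (T, Duration) {
    let start = Instant::now();
    let out = f();
    (out, start.elapsed())
}
```

Timing `read_sequential` against `read_parallel` with `timed` on the same list should show the sequential run paying the overhead once per file while the parallel run pays it roughly once. A thread per file is fine for a dozen documents; for a large directory, a bounded pool or an async runtime like tokio is the more usual way to cap concurrency.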